AI coding: plateauing but also accelerating

Coding agents, non-coding agents, background coding agents, code fatigue, and other cool stuff

3 months ago I wrote that Nobody Codes Here Anymore. It feels like a good time for an update.[1]

Agents

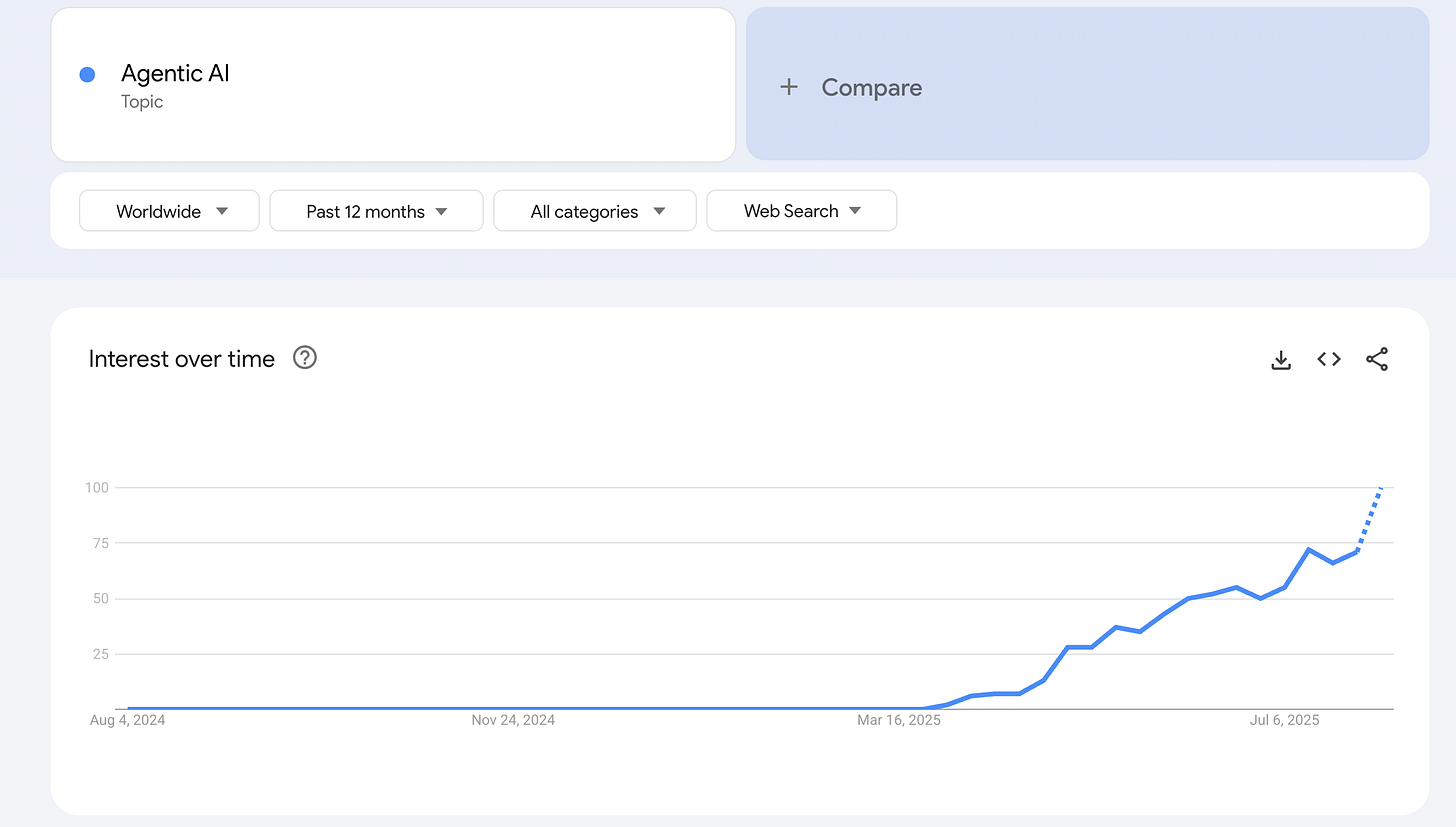

Since a few months ago, everyone has been talking about agents. This isn’t new - Claude Code is a coding agent - but it took a bit longer for the LinkedIn generation to catch up.

Agents sounded smart and magical. They are - because LLMs are - but conceptually an agent is just a bit[2] of extra tooling on top of an LLM. My understanding is that agents combine three things that LLMs do well:

Selecting the best option from a set of available options.

Reading & writing code.

Reading and understanding text.

LLMs are good at these things in general. They apply particularly well to coding agents, which essentially just repeat this sequence:

Read your prompt (Reading and understanding text).

Select from an available set of tools (ReadFile, WriteFile, Grep, Search, etc.) based on your prompt (Selecting the best option from a set of available options).

Read your code (Reading & writing code).

Write new code (Reading & writing code).

Run unit tests, and determine what needs to be done to make them pass (Reading and understanding text).
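To make that loop concrete, here is a minimal Python sketch. It is not how Claude Code is built: the tool names mirror the list above, but the in-memory `Workspace`, the `run_tests` check, and `scripted_model` (which replays a fixed plan instead of calling a real LLM) are all hypothetical. The point is how little the loop itself does; choosing the next tool is the model's job.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ToolCall:
    """A tool the model has chosen, plus the arguments to call it with."""
    name: str
    args: dict


@dataclass
class Workspace:
    """Hypothetical in-memory stand-in for the repository the agent works on."""
    files: dict[str, str] = field(default_factory=dict)


def read_file(ws: Workspace, path: str) -> str:
    return ws.files.get(path, "")


def write_file(ws: Workspace, path: str, content: str) -> str:
    ws.files[path] = content
    return f"wrote {path}"


def grep(ws: Workspace, pattern: str) -> list[str]:
    return [path for path, text in ws.files.items() if pattern in text]


def run_tests(ws: Workspace) -> str:
    # Hypothetical unit test: passes once app.py defines greet() returning "hello".
    namespace: dict = {}
    exec(ws.files.get("app.py", ""), namespace)
    greet = namespace.get("greet")
    return "PASS" if greet is not None and greet() == "hello" else "FAIL"


# The set of tools the model can pick from on each turn.
TOOLS = {"ReadFile": read_file, "WriteFile": write_file, "Grep": grep, "RunTests": run_tests}

# A "model" takes the conversation so far and returns the next tool call, or None when done.
Model = Callable[[list], Optional[ToolCall]]


def run_agent(prompt: str, model: Model, ws: Workspace, max_steps: int = 10) -> list:
    history: list = [("user", prompt)]
    for _ in range(max_steps):
        call = model(history)  # select the best option from the available tools
        if call is None:
            break
        result = TOOLS[call.name](ws, **call.args)
        history.append((call.name, result))  # the model reads this on its next turn
    return history


def scripted_model(plan: list[ToolCall]) -> Model:
    """Stand-in for a real LLM: replays a fixed plan and ignores the history."""
    queue = list(plan)
    return lambda history: queue.pop(0) if queue else None


if __name__ == "__main__":
    ws = Workspace({"app.py": "def greet():\n    return 'hi'\n"})
    plan = [
        ToolCall("Grep", {"pattern": "greet"}),
        ToolCall("ReadFile", {"path": "app.py"}),
        ToolCall("WriteFile", {"path": "app.py", "content": "def greet():\n    return 'hello'\n"}),
        ToolCall("RunTests", {}),
    ]
    history = run_agent("Make greet() return 'hello'", scripted_model(plan), ws)
    print(history[-1])  # ('RunTests', 'PASS')
```

Swapping `scripted_model` for a real model call, one that reads `history` and decides what to do next, is where all the hard work happens; the loop around it stays this small.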

It wasn’t until I read How to Build an Agent (much less scary than it sounds) that I realised how simple agents are, conceptually. There’s a lot of elbow grease in the details, but all the really smart bits come from the model being so good at those 3 tasks.

Coding Agents

A friend who works at a company whose product uses LLMs heavily[3] tells me that the models they build on used to improve all the time. This made his job hard because he constantly needed to run new evals, but it was generally a good thing - a rising tide.

But since about mid-2024 that’s stopped. He speculates that’s when the model developers found product-market fit in optimising the models for tool usage and coding, and stopped focusing on anything else. Their product isn’t a coding product, so for their use case, progress has plateaued.[4]

I agree with this theory. “AI” is getting better at coding, and that progress feels like it’s diverging from more general “AI” progress. But it’s not clear whether the underlying models are getting better, or whether we’re just getting better at wrapping them as coding agents.

Where it gets even more confusing is that more functionality (or “tools”) is being built on top of coding agents. Broadly, this functionality tries to codify techniques that have proven to be good ways of prompting an agent. A good example is the Claude Code GitHub Action. It is the king of code review, but it’s just a specialised prompt.
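To illustrate what “just a specialised prompt” means, a tool like this boils down to a fixed template wrapped around your code. The sketch below is hypothetical: `build_review_prompt` and its wording are made up for illustration and are not the GitHub Action’s real prompt or API.

```python
def build_review_prompt(diff: str, guidelines: list[str]) -> str:
    """Hypothetical review template: fixed instructions, then team rules, then the diff."""
    rules = "\n".join(f"- {rule}" for rule in guidelines)
    return (
        "You are reviewing a pull request. Point out bugs, risky changes and "
        "missing tests. Be concise and name the files you refer to.\n\n"
        f"Team guidelines:\n{rules}\n\n"
        f"Diff:\n{diff}\n"
    )


if __name__ == "__main__":
    # The resulting string is what would be sent to the model as its instructions.
    print(build_review_prompt("+x = 1", ["No print statements", "Add tests for new code"]))
```

The value lies in the wording of the template and in wiring it into the pull request workflow, not in any new capability of the model.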

A less good example is Claude Code Subagents. The problem with Subagents is that, at least in our testing, they don’t work very well when you explicitly request them, and the models don’t use them when you don’t ask them to. This is similar to the findings here that suggest that models will prefer to just write the code themselves, rather than use tools they created.

Everyone’s talking about The Bitter Lesson, and I think the bitter lesson here will be that the vanilla agent can write code just fine without specialised tools or agents, crafted by humans (or by LLMs!), to guide it toward better-written code.

Cloud-Based Background Coding Agents

I wanted to talk a little bit about this, because I feel like either I’m going mad or all the product managers in AI are.

I tried OpenAI Codex (the cloud version, not the CLI). The pitch of “get it working on some tasks and come back to working PRs” is so compelling. But the implementation is so slow that it feels unworkable.

There are some tasks where you can one-shot a prompt and get a working PR that does exactly the thing, nothing else, and requires no further changes. But those tasks are not that common, at least not for me. Unfortunately, if you need to make any changes via Codex, it feels like it has to re-download & configure your entire environment to execute them. Or something like that. The feedback loop is just way too slow.

It’s night and day compared to Claude Code. That makes some sense; one’s local and one’s not. But it does make me wonder who is actually using these cloud-based products successfully, or what I am missing.

I feel the same about worktrees, despite the Claude Code team really trying to encourage everyone to adopt them. I’d rather do one thing at a time and do it well than have 5 things going concurrently and try to keep up with them all. When I tried to force myself to learn worktrees, I ended up feeling like I needed to come up with some uninspiring maintenance tasks just to keep all my Claudes busy. Sometimes that’s actually what you need to do, but most of the time I’d rather focus on doing one thing well. Doing that one thing twice as fast thanks to a single Claude is enough of a win.

Background coding is in the thumbs-down bucket today.

(This is distinct from running Claude Code in the background to make a plan, or to write some code. That still rules.)

Coding Agent Fatigue

I went through a phase where I was easily building & shipping new features every day. This was in a relatively new part of the codebase, where there were fewer existing patterns and it felt more acceptable to just adopt Claude’s coding style/architecture (still governed by our lint rules and Claude instructions). The features worked and were useful.

Shipping that much stuff started to get tiring, even though I wasn’t hand-writing every line of code. That was a strange phenomenon!

The code gets written faster, but you still need time to test if it works, absorb the finished product, ponder future directions, and decide on what to build next. None of these tasks have doubled in speed, but doing them more often is a new, different kind of mental work.
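This is essentially Amdahl’s law: speeding up one part of a job only speeds up the whole in proportion to that part’s share. A small Python sketch, assuming (purely for illustration, these are not measured numbers) that writing code is half of each build cycle and becomes 2x faster:

```python
def overall_speedup(coding_fraction: float, coding_speedup: float) -> float:
    """Amdahl's law: only the coding share of a build cycle gets faster."""
    other = 1.0 - coding_fraction
    return 1.0 / (other + coding_fraction / coding_speedup)


def non_coding_share(coding_fraction: float, coding_speedup: float) -> float:
    """Share of the new, shorter cycle spent testing, absorbing, pondering and deciding."""
    other = 1.0 - coding_fraction
    return other / (other + coding_fraction / coding_speedup)


if __name__ == "__main__":
    # Illustrative assumption: coding is half of each cycle and gets 2x faster.
    print(round(overall_speedup(0.5, 2.0), 2))   # 1.33
    print(round(non_coding_share(0.5, 2.0), 2))  # 0.67
```

In that example each cycle is only about 1.33x faster overall, and the un-accelerated work grows from half of each cycle to two thirds of it. Even infinitely fast code generation would cap the gain at 2x, which is one way to see why shipping more often is mostly more of the thinking work.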

Agents for things that aren’t coding

All this is for coding. What about other realms?

AccountingBench is an experiment to teach LLMs to do bookkeeping. Its main finding was that models consistently become incoherent over longer time horizons, even when they can complete discrete tasks well.

At a glance, bookkeeping sounds like it should be pretty similar to coding in terms of the things an LLM is good at. You still read text, you still select the correct tool, and you’re allowed to write code to do it. But the results suggest that frontier LLMs are notably worse at bookkeeping than they are at coding.
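One simplified way to see why a long horizon hurts even when individual steps go well: if each step is right with probability p and errors are independent, the chance of a fully clean run of n steps is p^n. The independence assumption and the 99% figure below are illustrative only, not AccountingBench’s methodology or results; real agents can also catch or compound their own mistakes.

```python
import math


def chance_of_clean_run(step_accuracy: float, steps: int) -> float:
    """Probability that every one of `steps` steps is right, assuming independent errors."""
    return step_accuracy ** steps


def steps_until_coin_flip(step_accuracy: float) -> int:
    """Fewest steps after which a fully clean run is no more likely than a coin flip.

    Requires 0 < step_accuracy < 1.
    """
    return math.ceil(math.log(0.5) / math.log(step_accuracy))


if __name__ == "__main__":
    # Illustrative assumption: the agent gets each individual step right 99% of the time.
    print(round(chance_of_clean_run(0.99, 100), 3))  # 0.366
    print(steps_until_coin_flip(0.99))               # 69
```

At 99% per step, a 100-step task comes out fully clean only about 37% of the time, and the odds fall below a coin flip by step 69.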

Can you name successful software products that are “AI Agents for X”? Maybe 2? This might change, and maybe we’re still really early, but we’ve been “really early” for quite a while now, particularly given how much easier it has become to build software.

Today:

I’m bullish on coding agents (due to us discovering new techniques, with model improvements as a cherry on top).

I’m not bullish on agents for other stuff.

I look forward to being proven entirely wrong.

Other stuff that’s cool

The Claude GitHub Action is awesome for code review.

I’m now encouraging everyone to get a Claude Max subscription if they get rate-limited on Pro. I’m not surprised this is a growing problem.[5]

We’re getting great outcomes setting up Claude Code for non-technical staff (e.g. support teams, technical writers) and letting them use it to read the codebase and answer their own questions about functionality.

This comment is underrated. There is still a strong correlation between how well you understand (and can explain!) Claude’s output, and how effective your finished product is.

I’m increasingly certain that agentic programming is a skill in the same way that programming is. Just as you can be bad, average, good, or great at programming, so you can be at agentic programming. Just as there are 10x engineers, there will be 10x agentic engineers.

I spent about a week working on this post, between writing drafts, waiting for feedback, and pondering into the abyss. In that time there were about 3 big new announcements that could, over the next 3 months, make everything I said here totally wrong.

But I’m betting they won’t, and hitting publish.

[1] I’m writing this footnote the day that GPT-5 dropped, so it’s possible that everything I write here is wrong.

[2] apologies to the geniuses that made them possible

[3] in their product, not just in their investor briefings

[4] Here’s a recent example that’s arguing something similar:

[5] My hack for this is to live in a time zone that most Claude users don’t live in; we seem to get rate-limited a lot less than the wider internet. It’s not the only reason to move to Australia.

I've had mixed results with worktrees. Working on a side project, I got it to build 2 fairly sizeable chunks of work in parallel that I was happy with. And at Workforce, I got it to refactor 10 related classes at the same time, making a very similar change in all of them, and it one-shotted most of them. It's kind of hard to fully utilize at work though, as I can only have one dev server running at a time. So it's only good for TDD stuff or stuff I can eyeball.

But yeah, most of the time it's easier and more efficient to let one Claude go at a time.

I really agree with this point: "The code gets written faster, but you still need time to test if it works, absorb the finished product, ponder future directions, and decide on what to build next. None of these tasks have doubled in speed, but doing them more often is a new, different kind of mental work." I have also noticed this. I am an experienced developer, I use AI, and it's still taking me time to build a "fairly simple website". This is because there are so many things to consider other than purely writing code: where to put the code, how to reuse parts of the code to make everything more efficient, what features to put where on the site to drive engagement, etc. AI really helps me get things done, but it isn't doing all these things for me. Not by a long way.